About Me

I’m currently an ML Research Engineer at Apple. Prior to joining Apple, I received my PhD from Washington State University, where I worked at the Embedded Machine Intelligence Lab (EMIL) under the supervision of Dr. Hassan Ghasemzadeh. My current research focuses on the real-world challenges of working with Large Language Models (LLMs), particularly inference efficiency and reasoning capability.

Interests

- Large Language Models

- Formal Reasoning

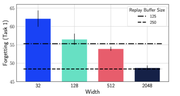

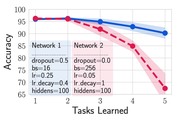

- Continual Learning

- Inference Optimization

Education

Ph.D. in Computer Science (Artificial Intelligence), 2018-2022

Washington State University

M.Sc. in Computer Science (Artificial Intelligence), 2018-2020

Washington State University

B.Sc. in Computer Engineering (Information Technology), 2013-2018

University of Tehran

Experience

Machine Learning Research Engineer

Research Scientist Intern

Graduate Research Assistant

Machine Learning Engineer

Selected Publications

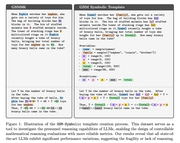

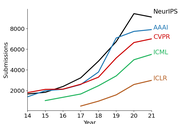

The 62nd Annual Meeting of the Association for Computational Linguistics (ACL), 2024

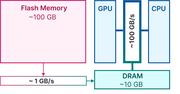

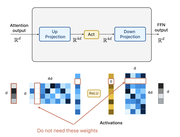

International Conference on Learning Representations (ICLR), 2024 [Oral]

International Conference on Machine Learning (ICML), 2022

International Conference on Learning Representations (ICLR), 2021