The Bitter AGI Lesson: Intuition & Heuristic = Statistics


Over the past year, I’ve worked with language models of various sizes, from small to ridiculously large. Initially, I was often surprised by their behavior. Sometimes they seemed incredibly smart; other times, astonishingly dumb. I’m not the only one who has worked closely with these models, and I’ve read all kinds of opinions. Some people claim to “feel the AGI” or “see some sparks”, while others think these models are stupid and we are “hitting a wall”. And of course, there are the AGI doomers and the super-alignment cults. I’ve seen them all! Having been exposed to all of these perspectives, I’ve developed my own views on the matter, and today I’m going to share them with you.

Search & Reasoning: Components of Intelligence

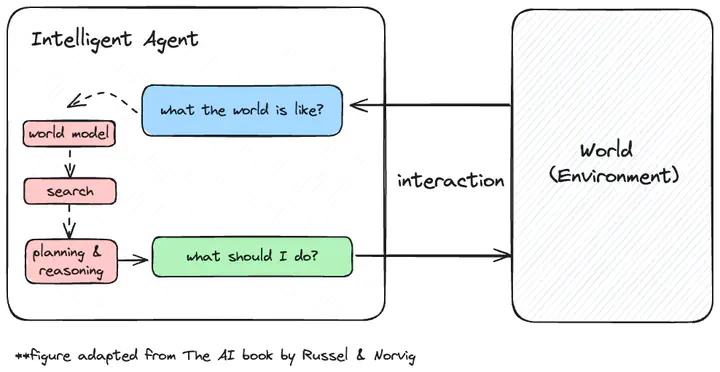

An intelligent system should have at least two major components[^1]: (1) search, and (2) reasoning. Obviously, this is a very abstract yet classic AI formulation, and depending on how you implement components 1 and 2, you may get anything from a calculator to a chess program to a super-intelligent creature. Very crudely, the search component looks for solutions in the vast space of possible candidates, while the reasoning component handles verification, planning, and formulating an answer or action.

For example, given infinite time and compute to solve a single problem, one could find the answer with a trivial program: exhaustively enumerate every possible sequence of English tokens and check whether each one is the answer (for simplicity, we assume the answer consists of a bounded number of English tokens/words). But obviously, that is neither feasible nor desirable in practice: the number of candidates grows exponentially with the length of the answer. Hence, we need a much more efficient search algorithm.
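To make the cost of exhaustive search concrete, here is a minimal generate-and-verify sketch in Python. The five-word vocabulary, the target sentence, and the `is_answer` verifier are hypothetical toys; in a real system the verifier is exactly the hard "reasoning" component. The function enumerates every token sequence up to `max_len` and counts how many candidates it checks before it finds the answer.

```python
from itertools import product

def exhaustive_search(vocab, max_len, is_answer):
    """Check every token sequence of length 1..max_len; return (answer, tries)."""
    tries = 0
    for length in range(1, max_len + 1):
        # product() yields all len(vocab) ** length sequences of this length
        for candidate in product(vocab, repeat=length):
            tries += 1
            if is_answer(candidate):  # the "verifier" step
                return candidate, tries
    return None, tries

# Toy setup (hypothetical): the verifier simply knows the target sentence.
VOCAB = ["the", "cat", "sat", "on", "mat"]
TARGET = ("the", "cat", "sat")

answer, tries = exhaustive_search(VOCAB, 3, lambda c: c == TARGET)
print(answer, tries)
```

With only five words the search already checks 38 candidates. The number of candidates of length n is |V|^n, so a hypothetical 50,000-token vocabulary and a 20-token answer would mean 50,000^20 candidates at that length alone.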

But how do humans do this? Most of the time, we speak and think intuitively. In other words, intuition is the heuristic of our search algorithm. We often say someone has better intuition when they have a better heuristic in their search algorithm and can find ideas (i.e., candidate answers) much faster.
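Here is a minimal sketch of the same toy problem solved with a heuristic instead of brute force. It uses a classic best-first search (specifically uniform-cost search) that always expands the partial sequence with the lowest accumulated cost, where each step's cost is the negative log-probability of the next word. The probability table is made up for illustration and stands in for "statistics learned from experience"; this is a swapped-in textbook technique, not a claim about how any particular model searches.

```python
import heapq
import math

def best_first_search(vocab, max_len, is_answer, step_cost):
    """Expand the cheapest (most "intuitive") partial sequence first.

    step_cost(prefix, token) is the heuristic: lower means more plausible.
    A prefix's priority is the sum of its step costs (uniform-cost search).
    """
    tries = 0
    frontier = [(0.0, ())]  # heap of (accumulated cost, token tuple)
    while frontier:
        cost, prefix = heapq.heappop(frontier)
        if prefix:
            tries += 1
            if is_answer(prefix):  # verify only the candidates we actually pop
                return prefix, tries
        if len(prefix) < max_len:
            for token in vocab:
                heapq.heappush(frontier, (cost + step_cost(prefix, token), prefix + (token,)))
    return None, tries

# Hypothetical "experience": made-up next-word probabilities, not real data.
NEXT_WORD_PROB = {
    (None, "the"): 0.9,
    ("the", "cat"): 0.6, ("the", "mat"): 0.4,
    ("cat", "sat"): 0.9, ("sat", "on"): 0.9, ("on", "the"): 0.9,
}

def neg_log_prob(prefix, token):
    """Heuristic cost: -log P(token | previous word), with a small floor for unseen pairs."""
    prev = prefix[-1] if prefix else None
    return -math.log(NEXT_WORD_PROB.get((prev, token), 1e-3))

VOCAB = ["the", "cat", "sat", "on", "mat"]
TARGET = ("the", "cat", "sat")
answer, tries = best_first_search(VOCAB, 3, lambda c: c == TARGET, neg_log_prob)
print(answer, tries)
```

With this heuristic the search verifies only 3 candidates ("the", "the cat", "the cat sat"), compared with 38 for blind enumeration over the same vocabulary. A better heuristic means fewer wasted checks, which is the sense in which someone with better intuition finds ideas faster.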

Intuition = Heuristic in Search = Statistics

What is really striking about the above AI formulation is how wrong we humans have always been about which problem is the hard one to solve on the way to a capable AGI system!

Science fiction movies portrayed AI robots as creatures with immense intelligence that can do complex reasoning but cannot perform intuitive tasks or “feel” anything. They can’t possibly fathom the notion of intuition, creativity, or art. It wasn’t just our sci-fi writers who made such assumptions. If you asked an AI expert which is harder, “creating art/making a joke” or “performing logical reasoning,” I believe almost all of them would pick the first option. We thought intuition was the harder problem to solve. How can we even define intuition? How do we measure it, let alone build an agent that has it? It seemed impossible.

But we were wrong! Today’s foundation models have much better intuition than you and I will ever have. They can create original and beautiful paintings and soul-touching music. Many would argue that these models don’t understand the world at all and merely mimic data or predict the next token. I believe these critics are in the “denial phase”: today’s systems are very intuitive. That’s why it’s so easy to integrate them into current products!

It might be painful to accept that the answer to the decades-old, billion-dollar AI research question of “how to build an AI agent with intuition and creativity” is “let it learn to fit the data distribution”. But isn’t this what humans do as well? What we call intuition often comes from experience, which is learning from data. (Admittedly, humans are much more sample-efficient learners, but that’s a topic for another day, since we are not discussing the best learning algorithm here.)
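In its simplest possible form, "fitting the data distribution" is just counting. The sketch below is a toy maximum-likelihood bigram model, a deliberately tiny stand-in for what foundation models learn (they fit far richer distributions with neural networks, not count tables). The corpus, the `<s>` start marker, and the `intuitive_guess` helper are hypothetical; the "intuitive guess" is simply the continuation seen most often in the data.

```python
from collections import Counter, defaultdict

def fit_bigram(corpus):
    """Maximum-likelihood bigram model: P(next | prev) = count(prev, next) / count(prev, *)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split()  # "<s>" marks the sentence start
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev, nexts in counts.items():
        total = sum(nexts.values())
        model[prev] = {tok: c / total for tok, c in nexts.items()}
    return model

def intuitive_guess(model, prev):
    """The "gut feeling": the continuation seen most often after `prev`."""
    return max(model[prev], key=model[prev].get)

# Hypothetical toy "experience".
corpus = ["the cat sat on the mat", "the cat ate", "the dog sat"]
model = fit_bigram(corpus)
print(model["the"])  # {'cat': 0.5, 'mat': 0.25, 'dog': 0.25}
print(intuitive_guess(model, "the"))  # cat
```

Nothing in this model "understands" cats; its guess after "the" is purely a reflection of frequencies in the data it has seen.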

Final Thoughts: What to do next?

While the intuition problem is solved, the (formal) planning & reasoning part is still unsolved. This missing piece of the AGI puzzle is what makes me excited about the future, as I may live to see the answer to this question and hopefully have a chance to make a scientific contribution. I will share my detailed thoughts on the matter in another post, but I believe this is the problem everyone should be working on right now.

---

[^1]: Obviously, I’m focusing on intelligence here and not much on other parts, such as sensors or perception of the environment.