LabelMerger: Learning Activities in Uncontrolled Environments

Abstract

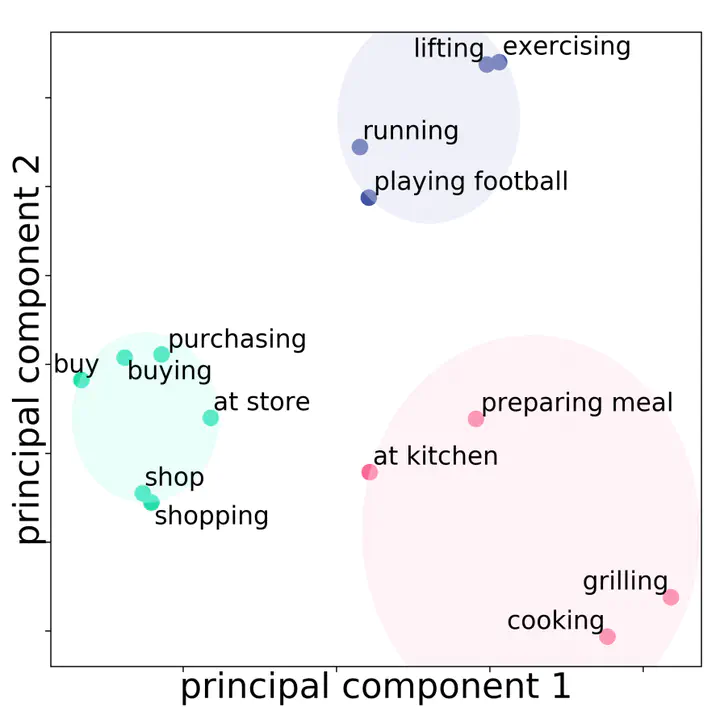

While inferring human activities from sensors embedded in mobile devices using machine learning algorithms has been studied, current research relies primarily on sensor data collected in controlled settings and/or from healthy individuals. There remains a gap in research on how to design activity recognition models based on sensor data collected from chronically ill individuals and in free-living environments. In this paper, we focus on a situation where free-living activity data are collected continuously, the activity vocabulary (i.e., the set of class labels) is not known a priori, and sensor data are annotated by end-users through an active learning process. By analyzing sensor data collected in a clinical study involving patients with cardiovascular disease, we demonstrate significant challenges that arise when inferring physical activities in uncontrolled environments. In particular, we observe that activity labels that differ in syntax can refer to semantically identical behaviors, resulting in a sparse label space. To construct a meaningful label space, we propose LabelMerger, a framework for restructuring the label space created through active learning in uncontrolled environments in preparation for training activity recognition models. LabelMerger combines the semantic meaning of activity labels with physical attributes of the activities (i.e., domain knowledge) to generate a flexible and meaningful representation of the labels. Specifically, our approach merges labels using both word embedding techniques from natural language processing and activity intensity from physical activity research. We show that the new representation of the sensor data obtained by LabelMerger results in more accurate activity recognition models compared with the case where the original label space is used to learn recognition models.
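The abstract does not state exactly how word embeddings and activity intensity are combined. The following is a minimal sketch of one plausible reading, not the LabelMerger algorithm itself. Two labels are merged when the cosine similarity of their (assumed, precomputed) embedding vectors exceeds a threshold *and* their MET values fall in the same intensity band. Groups are then formed transitively with a union-find structure. The function names (`merge_labels`, `intensity_category`), the `sim_threshold` parameter, the input format, and the toy three-dimensional embeddings are all hypothetical. The MET cut-offs (sedentary up to 1.5, light below 3, moderate below 6, vigorous otherwise) follow commonly used conventions.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def intensity_category(met):
    """Map a MET value to a coarse intensity band using common cut-offs (assumed)."""
    if met <= 1.5:
        return "sedentary"
    if met < 3.0:
        return "light"
    if met < 6.0:
        return "moderate"
    return "vigorous"


def merge_labels(labels, sim_threshold=0.8):
    """Hypothetical merge rule: group labels that are semantically similar
    AND share an intensity band.

    labels: dict mapping label text -> (embedding vector, MET value).
    Returns a lexicographically sorted list of sorted label groups.
    """
    parent = {name: name for name in labels}

    def find(x):
        # Union-find root lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in combinations(labels, 2):
        emb_a, met_a = labels[a]
        emb_b, met_b = labels[b]
        same_band = intensity_category(met_a) == intensity_category(met_b)
        if same_band and cosine(emb_a, emb_b) >= sim_threshold:
            parent[find(a)] = find(b)  # union the two groups

    groups = {}
    for name in labels:
        groups.setdefault(find(name), []).append(name)
    return sorted(sorted(g) for g in groups.values())


if __name__ == "__main__":
    # Toy embeddings and MET values, invented purely for illustration.
    toy = {
        "walking": ([0.9, 0.1, 0.0], 3.5),
        "walk the dog": ([0.85, 0.2, 0.05], 3.0),
        "watching tv": ([0.0, 0.9, 0.3], 1.3),
        "sitting": ([0.05, 0.95, 0.2], 1.3),
        "running": ([0.8, 0.1, 0.4], 8.0),
    }
    print(merge_labels(toy))
```

With the toy data, "running" stays separate from "walking" even though their vectors are fairly similar, because the intensity constraint places them in different bands. This illustrates why a purely semantic merge could collapse behaviors that differ physically.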