The 62nd Annual Meeting of the Association for Computational Linguistics (ACL), 2024

International Conference on Learning Representations (ICLR), 2024 [Oral]

International Conference on Machine Learning (ICML), 2022

IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2021

International Conference on Learning Representations (ICLR), 2021

IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2020

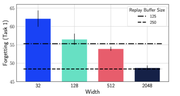

Runner-up award at CVPR'20 Workshop on Continual Learning in Computer Vision

International Joint Conference on Artificial Intelligence (IJCAI), 2020

AAAI Conference on Artificial Intelligence (AAAI), 2020